Katrina Ashton

I am a PhD student at the University of Pennsylvania, in the Department of Computer and Information Science, where I work on computer vision for robotics. I am co-advised by Prof. Kostas Daniilidis and Prof. Bernadette Bucher. I have done internships with the Robotics and AI Institute and Seeing Machines. I received a Bachelor of Engineering (R&D, Hons.) in Mechatronics and a B.S. in Mathematical Modelling from the Australian National University, where I also worked as a research assistant with Prof. Jochen Trumpf and at the 3A Institute.


Research

I'm interested in computer vision, machine learning and robotics. In particular, I work on scene representations for robotics, along with modular and interpretable policies that use these scene representations to perform tasks.


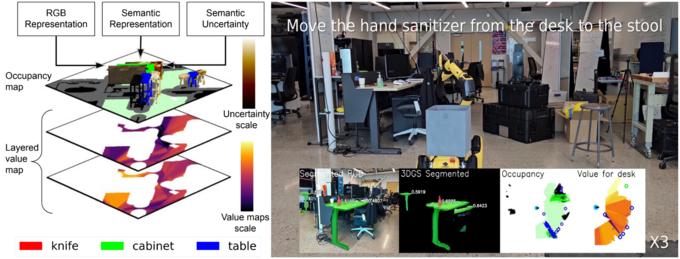

HELIOS: Hierarchical Exploration for Language-grounded Interaction in Open Scenes
Katrina Ashton, Chahyon Ku, Shrey Shah, Wen Jiang, Kostas Daniilidis, Bernadette Bucher
arXiv, 2025
arxiv / website

A zero-shot method for language-specified pick-and-place tasks. It reasons over a hierarchical scene representation, using a global search policy to balance exploration and exploitation and an uncertainty-aware object score so that objects are interacted with only after enough views have been captured to be confident of their class.


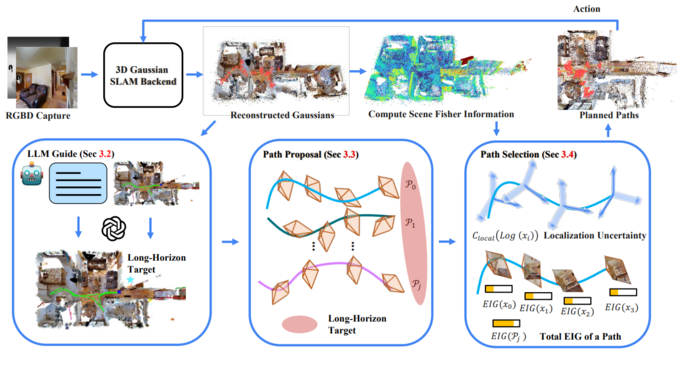

Multimodal LLM Guided Exploration and Active Mapping using Fisher Information
Wen Jiang*, Boshu Lei*, Katrina Ashton, Kostas Daniilidis
ICCV, 2025
arxiv

An active Gaussian Splatting SLAM system with an exploration strategy that balances the accuracy of mapping and localization.


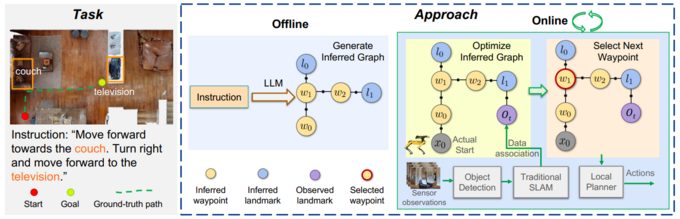

Zero-shot Object-Centric Instruction Following: Integrating Foundation Models with Traditional Navigation
Sonia Raychaudhuri, Duy Ta, Katrina Ashton, Angel X. Chang, Jiuguang Wang, Bernadette Bucher
arXiv, 2025
arxiv / website

A zero-shot method that grounds natural language instructions to a factor graph, together with a policy that uses this factor graph for object-centric Vision-and-Language Navigation.


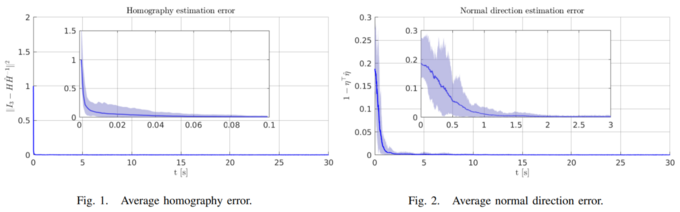

Equivariant Filter for Feature-Based Homography Estimation for General Camera Motion
Tarek Bouazza, Katrina Ashton, Pieter van Goor, Robert Mahony, Tarek Hamel
Conference on Decision and Control (CDC), 2024
paper

Homography estimation for arbitrary trajectories using the Equivariant Filter framework by exploiting the Lie group structure of SL(3).


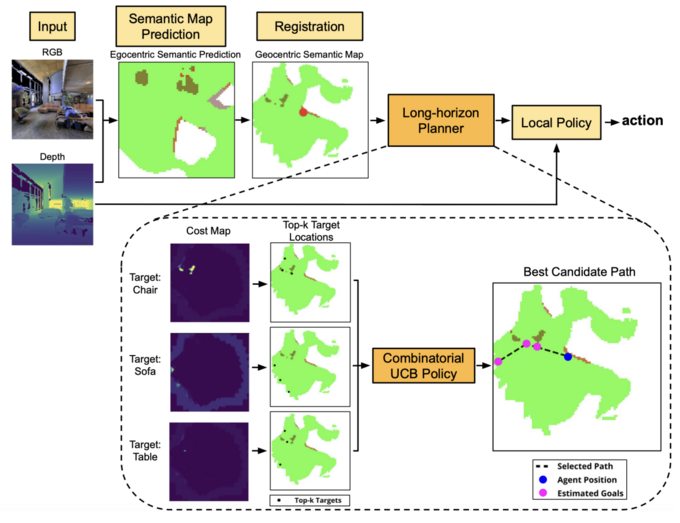

Unordered Navigation to Multiple Semantic Targets in Novel Environments
Bernadette Bucher*, Katrina Ashton*, Bo Wu, Karl Schmeckpeper, Nikolai Matni, Georgios Georgakis, Kostas Daniilidis
CVPR Embodied AI Workshop, 2023
paper

Proposing an objective to extend an objectnav method to unordered multi-object navigation.


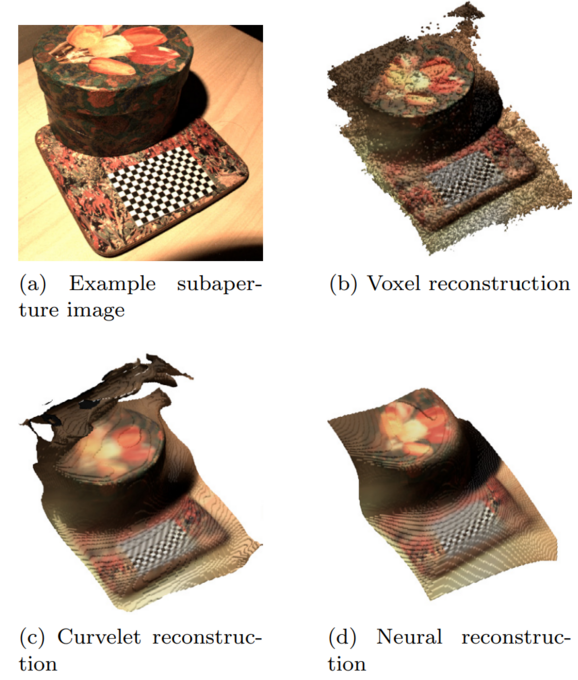

An observer for infinite dimensional 3d surface reconstruction that converges in finite time
Sean G. P. O’Brien, Katrina Ashton, Jochen Trumpf
IFAC-PapersOnLine, 2020
paper

An observer for reconstructing the dense structure of scenes from visual or depth sensors that provably converges in finite time, demonstrated with real light-field camera data.


Design and source code from Leonid Keselman's website